To start off, this was one of those projects that I would never have even started if it weren't for vibe coding. I signed up for Perplexity a while back (they offer 12 free months when you sign up with PayPal), and used Claude Sonnet 4.5 first to validate the idea, then to guide me through building my first n8n workflow and to code the more laborious data extraction loops. Last week I began by signing up for DigitalOcean and deploying a Docker instance to run the whole thing on. Then I fiddled around with some of the bits in the workflow builder and went to bed. But! Somehow, miraculously, I managed to come back to the project this week, and have spent three evenings on it, each time thinking it would be the last one.

But I'm getting ahead of myself. It would probably make sense to go over the use case first.

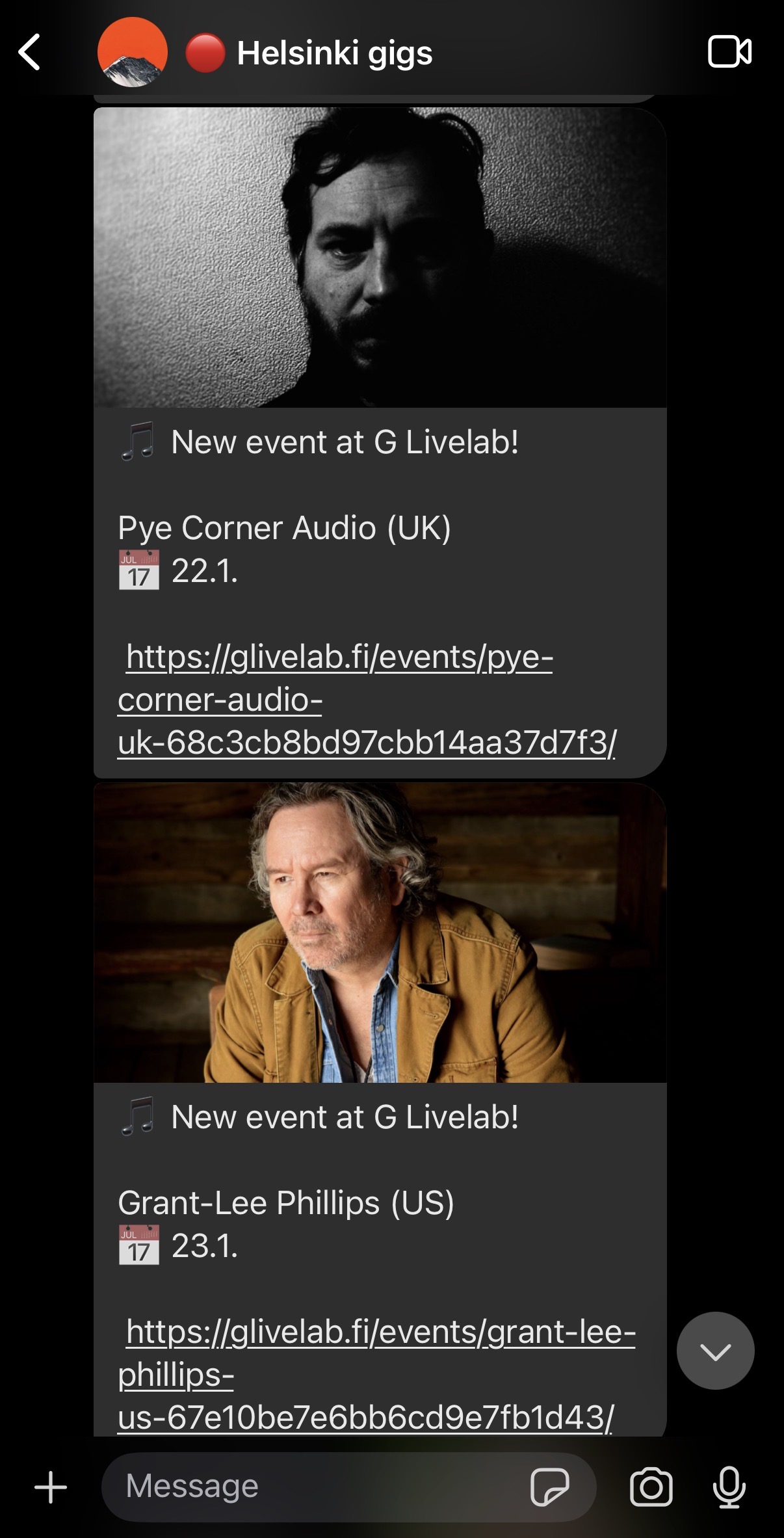

There are a handful of nice but pretty small concert venues in Helsinki, but I keep missing shows on account of never checking their sites. For example, I only just noticed that the brilliant Anna von Hausswolff is coming in December, and only because I started building this project. So I thought, wouldn't it be nice to get notified whenever new gigs are announced? I didn't want to add to the noise by installing yet another app on my phone, so I landed on Signal for the notifications. I already use Signal for messaging, and figured that what amounts to messaging myself wouldn't get too annoying. In short, I would build an automation that runs in the cloud, checks each of the venues I like for shows, and messages me when it finds new ones.

Here's what I'm working with:

- The cheapest kind of droplet in DigitalOcean (1 GB Memory / 25 GB Disk / AMS3 - Docker on Ubuntu 22.04), costing $6/month

- The n8n automation workflow builder running on that droplet

- signal-cli for sending messages on Signal

If you've seen low-code platforms, you'll know how n8n works. You build workflows in the visual UI by adding nodes and connecting them together. You can also write JavaScript or Python in Code nodes, or run queries against databases, but the idea is to simplify the flow of data so that the whole process is more approachable. Most of it is pretty straightforward, but this being my first time, there was some head scratching involved in passing all the needed data from one step to the next.
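
For anyone else scratching their head at the same spot: n8n passes data between nodes as a list of items, each wrapping its payload in a `json` property. Below is a minimal sketch of what a Code node in "Run Once for All Items" mode does with them. Inside n8n, `$input.all()` is provided by the runtime and you would write the body at the top level with a bare `return`; here `$input` is stubbed and the `venue`/`html`/`fetchedAt` fields are made up so the snippet runs on its own.

```javascript
// Stub of n8n's $input so this runs outside n8n (hypothetical sample items).
const $input = {
  all: () => [
    { json: { venue: "venue-a", html: "<html></html>" } },
    { json: { venue: "venue-b", html: "<html></html>" } },
  ],
};

// Each incoming item looks like { json: {...} }, and the node is expected to
// return an array of items in the same shape for the next node to consume.
function runCodeNode() {
  return $input.all().map((item) => ({
    json: {
      ...item.json, // carry forward everything earlier steps produced
      fetchedAt: new Date().toISOString(), // hypothetical extra field
    },
  }));
}

const output = runCodeNode();
console.log(output.map((item) => Object.keys(item.json)));
```

Spreading `item.json` into the new object is the simplest way to avoid losing fields (like the venue identifier) that a later node still needs.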

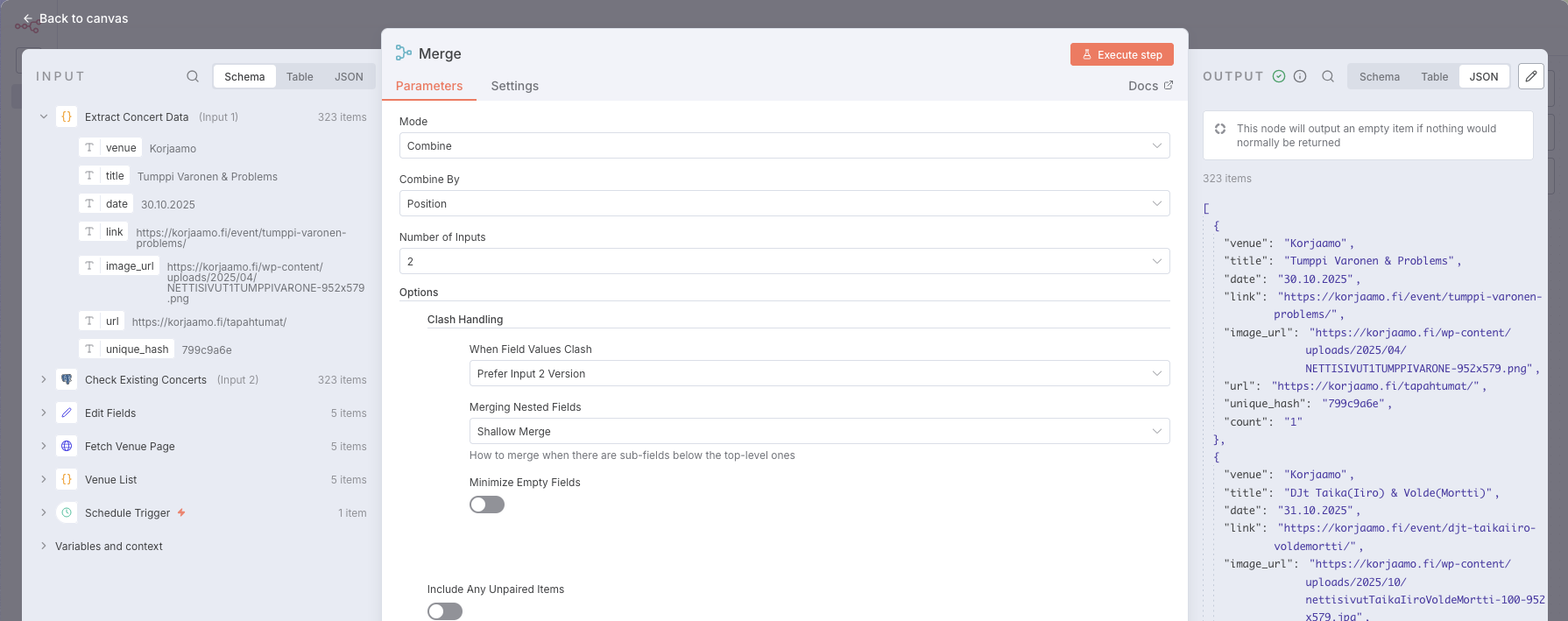

Looking back now, the workflow is pretty logical:


- Schedule Trigger starts the workflow every six hours

- Venue List contains the URL of each venue I want to track, as well as element selectors targeting the event structure on each page

- Fetch Venue Page just gets the HTML content on each of the concert listing pages

- Edit Fields is there to make sure all the needed info is passed on to the next step (combining the venue identifier with the page data)

- Extract Concert Data does the heavy lifting, trying different ways of capturing the event title, date, link, and image from the HTML, and maps them into a JSON object that it passes on, along with a hash it calculates from the data

- Check Existing Concerts checks whether each event can already be found in the Postgres database, based on its hash

- Merge combines the result of the check with the data needed in the next step

- Is New Concert is just a binary switch that only lets through events that were not found in the database

- Construct Signal Message parses the data and sends a new message whenever a new event is found

- Save New Concert persists the hash and basic info of each event in the database
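
To make the first few steps more concrete, here is a rough sketch of what the Venue List data and the Edit Fields pairing could look like. The venue IDs, URLs and selector strings are all hypothetical, and pairing fetched pages to venues by position assumes the responses come back in the same order as the venue list.

```javascript
// Hypothetical Venue List contents: one entry per venue, with the listing
// URL and the selectors describing where events live on that page.
const venues = [
  {
    id: "venue-a",
    url: "https://example.com/venue-a/events",
    selectors: { event: "article.event", title: "h2", date: "time" },
  },
  {
    id: "venue-b",
    url: "https://example.org/gigs",
    selectors: { event: "li.gig", title: ".gig-title", date: ".gig-date" },
  },
];

// The fetched HTML on its own doesn't say which venue it came from, so each
// page is paired with its venue entry before extraction (the Edit Fields job).
function pairPagesWithVenues(venueList, pages) {
  if (venueList.length !== pages.length) {
    throw new Error("venue/page count mismatch");
  }
  return pages.map((html, i) => ({
    json: {
      venueId: venueList[i].id,
      selectors: venueList[i].selectors,
      html,
    },
  }));
}

const items = pairPagesWithVenues(venues, ["<ul></ul>", "<div></div>"]);
console.log(items[1].json.venueId); // "venue-b"
```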

I also have a couple of non-connected database nodes visible. These are not run on the schedule, and were mostly used for testing during development.

Using a low-code platform for vibin' worked OK-ish, but because the AI has no direct visibility into the code in the system, a lot of copy-pasting was involved. Luckily, only two nodes in the workflow have code in them (and the first one just contains JSON), and I felt Claude managed the context well. The small code view in the UI makes longer code blocks hard to work with, so I'll try to clean up the code in Cursor and see whether it can be generalized and simplified. Right now it relies on some pretty convoluted regexing to find the right content on the pages.
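
To give an idea of what regex-based extraction without a DOM library looks like, here is a minimal sketch. It is not the workflow's actual code: it assumes a hypothetical page where every event sits in an `<article class="event">` block with a link, an `<h2>` title, a `<time datetime="...">` element and an image. Each real venue needs its own patterns, which is exactly how this gets convoluted.

```javascript
// Regex-based event extraction for one hypothetical page layout.
function extractEvents(html, baseUrl) {
  const blockRe = /<article class="event">([\s\S]*?)<\/article>/g;
  const events = [];

  for (const [, block] of html.matchAll(blockRe)) {
    // Return the first capture group of `re` within this block, or null.
    const pick = (re) => {
      const m = block.match(re);
      return m ? m[1].trim() : null;
    };

    const title = pick(/<h2[^>]*>([\s\S]*?)<\/h2>/);
    if (!title) continue; // not a usable event block

    const href = pick(/<a\s[^>]*href="([^"]+)"/);
    const src = pick(/<img\s[^>]*src="([^"]+)"/);

    events.push({
      title,
      date: pick(/<time\s[^>]*datetime="([^"]+)"/),
      // Relative links and image paths are resolved against the venue URL.
      link: href ? new URL(href, baseUrl).href : null,
      image: src ? new URL(src, baseUrl).href : null,
    });
  }
  return events;
}

const sample = `
<article class="event">
  <a href="/events/123"><h2>Example Artist</h2></a>
  <time datetime="2025-12-05">Fri 5.12.</time>
  <img src="/img/123.jpg" alt="">
</article>`;

console.log(extractEvents(sample, "https://example.com/venue"));
```

Regexes over HTML break as soon as a venue tweaks its markup, so a pattern set per venue (kept next to the URL in the venue list) is probably easier to maintain than one clever catch-all.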

The biggest annoyances came early on, with the AI suggesting packages for page scraping or hash calculation that the workflow didn't have access to. I tried installing one of them, but eventually settled on vanilla JS. Claude also tended to build very case-specific solutions to the tasks at hand, with no regard for future maintainability.
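
For the hash, no package is actually needed. One dependency-free option is 32-bit FNV-1a, sketched below; this is a swapped-in example, not necessarily the hash the workflow uses, and which fields make up the key (`venueId`, `title`, `date` here) is a hypothetical design choice. It hashes UTF-16 code units rather than UTF-8 bytes, which is fine as long as hashes are only ever compared with each other.

```javascript
// 32-bit FNV-1a over the string's UTF-16 code units, as 8 hex characters.
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime, keep 32 bits unsigned
  }
  return hash.toString(16).padStart(8, "0");
}

// Hypothetical concert key: the same venue, title and date give the same hash.
function concertHash({ venueId, title, date }) {
  return fnv1a32([venueId, title, date].join("|"));
}

console.log(fnv1a32("a")); // "e40c292c" (standard FNV-1a test vector)
console.log(concertHash({ venueId: "venue-a", title: "Example Artist", date: "2025-12-05" }));
```

If a venue edits an event's title after announcing it, the hash changes and the event will look new again, so the choice of key fields decides what counts as "the same concert".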

Other than that, the biggest setback came when I thought I already had everything set up properly: because a check was missing from the Signal messaging node, the system re-sent a message for every event at every venue every six hours. I thought I had gone viral when I woke up to 640 new messages on my phone.
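
The post doesn't spell out exactly which check was missing, but the general guard looks something like the sketch below: whatever reaches the messaging step should already be filtered down to hashes that aren't in the database. `existingHashes` stands in for the rows returned by the Postgres lookup, and the `hash` field name is an assumption.

```javascript
// Keep only concerts whose hash hasn't been stored yet. Duplicates within
// the same run are dropped too, so one event can't be announced twice.
function selectNewConcerts(concerts, existingHashes) {
  const seen = new Set(existingHashes);
  const fresh = [];
  for (const concert of concerts) {
    if (seen.has(concert.hash)) continue; // already announced earlier
    seen.add(concert.hash);
    fresh.push(concert);
  }
  return fresh;
}

const batch = [{ hash: "a" }, { hash: "b" }, { hash: "b" }, { hash: "c" }];
console.log(selectNewConcerts(batch, ["a"]).map((c) => c.hash)); // [ 'b', 'c' ]
```

Both the messaging and the saving step should consume this filtered list; if either one reads from the unfiltered branch, every scheduled run repeats old announcements.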

I created a dedicated group for this in Signal and set it so that only admins can send messages. I've yet to receive a genuine update from the workflow, but I'm pretty confident that it now works as it should. Future improvements might include a dedicated virtual phone number for the bot, so that it could have its own identity on Signal and look more professional. It looks like this could be done with MySudo, but at this point I'm not ready to spend more money on this. The plan is to let friends and family join the group, and I don't mind much even if it looks like I'm the one posting each of the events.
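
The post doesn't say how the workflow hands the message to signal-cli, so the sketch below only covers formatting a message and building an argument list for a group send. The flags (`-a` for the sending account, then `send` with `-m` for the text and `-g` for the group ID) follow signal-cli's documented CLI, but it's worth checking `signal-cli send --help` on your installed version. The phone number, group ID and message layout are placeholders.

```javascript
// Hypothetical message layout: one line per field, skipping missing ones.
function formatConcertMessage({ venueName, title, date, link }) {
  return [`New gig at ${venueName}`, title, date, link].filter(Boolean).join("\n");
}

// Argument list for a signal-cli group send; account and group ID are placeholders.
function signalSendArgs(account, groupId, message) {
  return ["-a", account, "send", "-m", message, "-g", groupId];
}

const text = formatConcertMessage({
  venueName: "Venue A",
  title: "Example Artist",
  date: "2025-12-05",
  link: "https://example.com/events/123",
});
console.log(signalSendArgs("+15555550100", "GROUP_ID_PLACEHOLDER", text));
```

Building an argument array rather than a single command string means it can be passed to something like Node's `child_process.execFile("signal-cli", args)`, which skips the shell, so quotes or emoji in band names need no escaping.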

All in all, this was a very fun project, and one I actually got to finish. It remains to be seen how useful it turns out to be, but if it keeps me up to date on the events at my favourite venues, that's a win in my book.